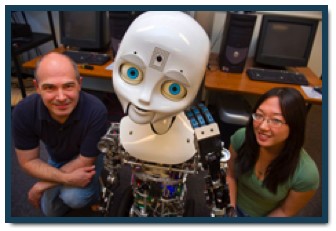

The robot in the image is Nexi, an MIT creation that can reproduce the facial expressions of a handful of emotions. Another robot, Kansei (created by Junichi Takeno et al.), can look up information and display emotions with its highly expressive face.

These are cool robots, but neither of them can actually simulate human emotions. Human emotions are embodied (physically, culturally, and environmentally), and they are also shaped by a lifetime of experience and memory.

Kansei searches a database of more than 500,000 words and correlates them with basic emotions such as happiness and sadness. The database groups words together based on their associations in news stories and other information.

For example, when Kansei hears the word "president," it finds associated words like "Bush," "war," and "Iraq." As a result, the word "president" causes Kansei to assume an expression somewhere between fear and disgust. The word "sushi," on the other hand, elicits expressions of enjoyment.
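To make the word-association idea concrete, here is a minimal Python sketch, assuming Kansei works roughly as described above: look up the words associated with what it hears, then blend the emotions attached to those associated words. The association table, the emotion scores, and the averaging rule are all hypothetical, invented for illustration; this is not Takeno's actual implementation or data.

```python
from collections import Counter

# Hypothetical association table: each word maps to words linked to it in news
# and other sources. Kansei's real database (500,000+ words) is not reproduced here.
ASSOCIATIONS = {
    "president": ["bush", "war", "iraq"],
    "sushi": ["dinner", "fresh", "delicious"],
}

# Hypothetical emotion scores (0.0 to 1.0) attached to individual words.
EMOTION_SCORES = {
    "war": {"fear": 0.8, "disgust": 0.5},
    "iraq": {"fear": 0.6, "disgust": 0.4},
    "bush": {"disgust": 0.6},
    "dinner": {"happiness": 0.4},
    "fresh": {"happiness": 0.5},
    "delicious": {"happiness": 0.9},
}


def emotion_for(word: str) -> dict[str, float]:
    """Average the emotion scores of every word associated with `word`."""
    related = ASSOCIATIONS.get(word.lower(), [])
    if not related:
        return {}
    totals = Counter()
    for r in related:
        # Counter.update with a mapping adds the scores per emotion.
        totals.update(EMOTION_SCORES.get(r, {}))
    return {emotion: score / len(related) for emotion, score in totals.items()}


def dominant_expression(word: str) -> str:
    """Return the strongest blended emotion, or 'neutral' for unknown words."""
    scores = emotion_for(word)
    if not scores:
        return "neutral"
    return max(scores, key=scores.get)


print(emotion_for("president"))
print(dominant_expression("sushi"))
```

With these made-up numbers, "president" yields a mix of fear and disgust with no happiness, while "sushi" yields only happiness. Averaging is just one plausible blending rule; a real system could weight associations by strength or recency.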

Kansei, which means "sensibility" and "emotion" in Japanese, also contains speech recognition software, a speaker to vocalize, and motors that contort artificial skin on its face into expressions.

I guess it's an interesting and useful thought experiment, if nothing else.

Citation: The other half of the embodied mind

- Laboratory of Artificial Life and Robotics, Institute of Cognitive Sciences and Technologies, National Research Council, Rome, Italy

Embodied theories of mind tend to be theories of the cognitive half of the mind and to ignore its emotional half while a complete theory of the mind should account for both halves. Robots are a new way of expressing theories of the mind which are less ambiguous and more capable of generating specific and non-controversial predictions than verbally expressed theories. We outline a simple robotic model of emotional states as states of a sub-part of the neural network controlling the robot’s behavior which has specific properties and which allows the robot to make faster and more correct motivational decisions, and we describe possible extensions of the model to account for social emotional states and for the expression of emotions that, unlike those of current “emotional” robots, are really “felt” by the robot in that they play a well-identified functional role in the robot’s behavior.

Parisi D (2011) The other half of the embodied mind. Frontiers in Cognition, 2:69. doi: 10.3389/fpsyg.2011.00069

Here is the introduction to this paper; it provides a solid foundation for what Parisi is attempting. The paper can be read online or downloaded as a PDF.

Introduction

In the Western cultural tradition the mind tends to be viewed as separated from the body and, in accordance with this tradition, the sciences of the mind try to understand the mind with no reference to the body. In the last few decades, however, this has changed. The cumulative and fast advances of the sciences of the body (neurosciences, evolutionary biology, genetics, the biological sciences more generally) make all attempts at studying the mind while ignoring the body less and less plausible. In fact, the idea that the mind is embodied and that to understand the mind it is necessary to take the body into consideration is being accepted by an increasing number of researchers and constitutes the premise of many important current investigations (Barsalou, 1999, 2008; Robbins and Aydede, 2009). The embodied view of the mind has led to a recognition of the importance of the actions with which the organism responds to the stimuli in determining how the world is represented in the organism’s mind, in contrast to the traditional emphasis on mental representations as either entirely abstract or derived only from sensory input. This action-based view of the mind underlies a number of important ideas such as the grounding of symbols in the interactions of the organism with the physical environment (Harnad, 1990), the mental (neural) “simulation” of actions as a crucial component of all sorts of understanding (Gallese et al., 1996; Rizzolatti and Craighero, 2004), the mental representation of objects in terms not of their sensory properties but of the actions that the objects make possible (affordances; Gibson, 1977), and the action-based nature of categories (Borghi et al., 2002; Di Ferdinando and Parisi, 2004).
The embodied view of the mind is also reflected in computational models which reproduce the part of the body more directly linked to the mind, i.e., the brain (artificial neural networks) and, more recently, the entire body of the organism (robotics), and which have abandoned the disembodied view of the mind which is at the basis of artificial intelligence and of conceptions of the mind as symbol manipulation.

However, although the study of the mind can greatly benefit from an embodied conception of the mind, it still has to free itself from another tradition of Western culture which constitutes an obstacle to a complete understanding of the mind: the mind tends to be identified with cognition, that is, with knowing, reasoning, deciding, and acting. But cognition is only half of the mind. The other half of the mind is its emotional half and, although the two halves of the mind continuously interact and behavior is a result of both halves, no satisfactory account of the mind can be provided if the science of the mind is only “cognitive” science. Today one speaks of “embodied cognition,” “grounded cognition,” and the mental representation of objects in terms of the actions with which organisms respond to them. But organisms, including humans, do not only have knowledge, goals, and the capacity to act. They also have motivations and emotional states which play a crucial role in their behavior. Current embodied views of the mind tend to be concerned with the cognitive half of the mind but an embodied account of the mind must be extended to the other half of the mind, its emotional half and, in fact, some psychologists and neuroscientists are trying to extend the embodied conception of the mind to emotions (see, for example, Gallese, 2008; Freina et al., 2009; Glenberg et al., 2009).

Even if one assumes that the mind generally does not contain anything which is unrelated to sensory input and motor output (which is what embodied theories assume), the cognitive and the emotional halves of the mind may not function in the same way. Input to the brain can be input from the environment but also input from inside the body, and output from the brain can be external motor output but also changes in the internal organs and systems of the body, and these different sensory inputs and motor outputs may have different characteristics and consequences. This is why we need models that capture both the cognitive half and the emotional half of the mind and their interactions. These models should explicitly indicate both similarities and differences between embodied cognition and embodied emotion.

This also applies to the study of the mind through the construction of computational models or robots. Robots are the most appropriate tools for exploring embodied theories of the mind because, although in a very simplified form, they reproduce the body of organisms and the physical organ that controls the organisms’ behavior (neuro-robots), and this is true for both physically realized robots and for robots which are simulated in a computer. However, current robots mostly try to reproduce the cognitive half of the mind but they ignore its emotional half. The robots displace themselves in the environment, move their arms and reach for objects, turn their eyes and their face, but they do not have emotions. Some current robots produce postures and movements of their bodies (mostly, the face) that in humans express emotions and they can recognize the expressed emotions of humans as a purely perceptual task, but they cannot be said to really have emotions and to really understand the emotions of others (Picard, 2000, 2003; Breazeal, 2002; Adolphs, 2005; Canamero, 2005; Dautenhahn et al., 2009; Robinson and el Kaliouby, 2009; cf. Arbib and Fellous, 2004; Fellous and Arbib, 2005). (For an attempt at understanding the functional role of emotions in behavior, see Ziemke, 2008.) The reason is quite simple. The cognitive half of the mind is the result of the interactions of the brain with the external environment or of processes self-generated inside the organism’s brain (mental life). Current robots have artificial brains which interact with the external environment and, in some cases, can even self-generate inputs and respond to these self-generated inputs (Mirolli and Parisi, 2006, 2009; Parisi, 2007). But current robotics is an external robotics: robots reproduce the external morphology of an organism’s body, the organism’s sensory and motor organs, and the interactions of the organism’s brain with the external environment.
In contrast, the emotional half of the mind is the result of the interactions of the organism’s brain with the organism’s body and with the organs and systems that are inside the body. If we want to construct robots that can be said to really have emotions, what is needed is an internal robotics, that is, robots that have internal organs and systems with which the robot’s brain can interact (Parisi, 2004). Only an internal robotics can help us to better understand the emotional half of the mind and to construct a complete embodied theory of the mind.
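The difference between external and internal robotics can be illustrated with a toy control loop. This is a minimal Python sketch, not Parisi's model: the `Body` class with a single energy store, the hand-written `brain` rules standing in for a neural network, and "arousal" as an internal output are all hypothetical choices made for illustration. The point it shows is a brain that reads both an external input (what the robot sees) and an internal input (its energy level), and that produces both an external output (an action) and an internal output that changes the body.

```python
from dataclasses import dataclass


@dataclass
class Body:
    """Hypothetical internal organ: an energy store that drains every step."""
    energy: float = 1.0
    drain: float = 0.1

    def step(self, arousal: float) -> None:
        # Internal output from the brain: higher arousal burns energy faster.
        self.energy = max(0.0, self.energy - self.drain * (1.0 + arousal))

    def eat(self) -> None:
        self.energy = min(1.0, self.energy + 0.5)


def brain(food_visible: bool, danger_visible: bool, energy: float) -> tuple[str, float]:
    """Map external input (food, danger) and internal input (energy) to an
    external output (an action) and an internal output (arousal)."""
    if danger_visible:
        return "flee", 1.0            # strong internal reaction, costly for the body
    if food_visible and energy < 0.5:
        return "approach_food", 0.2   # low energy makes food relevant
    return "explore", 0.0


def run(episode: list[tuple[bool, bool]]) -> list[str]:
    """Run one episode of (food_visible, danger_visible) stimuli."""
    body = Body()
    actions = []
    for food, danger in episode:
        action, arousal = brain(food, danger, body.energy)
        if action == "approach_food":
            body.eat()
        body.step(arousal)
        actions.append(action)
    return actions


# Food in view, then danger, then several empty steps, then food in view again.
EPISODE = [(True, False), (False, True), (False, False),
           (False, False), (False, False), (True, False)]
print(run(EPISODE))  # prints ['explore', 'flee', 'explore', 'explore', 'explore', 'approach_food']
```

The first and last stimuli are identical (food in view, no danger), yet the robot ignores the food the first time and approaches it the last time, because its internal energy level has dropped in between. A purely external robot, whose brain sees only the environment, could not produce that difference.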

Computational models and, more specifically, robotic models are important to understand the mind. Theories in psychology tend to be expressed verbally but verbally expressed theories have limitations because words often have different meanings for different people and because verbally expressed theories may be unable to generate specific, detailed, and non-controversial predictions. Robots are an alternative way of expressing theories. The theory is used to construct a robot and therefore, in a sense, it can be directly observed and it can contain no ambiguity because otherwise the robot cannot be constructed. Furthermore, the theory generates many specific, detailed, and uncontroversial predictions which are the behaviors exhibited by the robot. These predictions can be empirically validated by comparing them with all sorts of empirical facts: the results of behavioral experiments, data on the ecology and past evolutionary history of the organism, and data on the organism’s body and brain.

As we have said, robotic models are especially appropriate for formulating embodied theories of the mind because, by definition, a robot has a body and the robot’s behavior clearly depends on its having a body. Furthermore, since the brain is part of the body, to be consistent robots should be neuro-robots, that is, robots whose behavior is controlled by a system that resembles the structure and functioning of the brain, i.e., an artificial neural network. This has the advantage that it becomes possible to examine the internal representations contained in the robot’s “brain” (the patterns of activation and successions of patterns of activation in the robot’s neural network) and to determine if they are embodied or non-embodied representations, i.e., if they reflect the robot’s actions and the reactions of the robot’s internal organs and systems to sensory input rather than the sensory input. (This is more difficult to do with “emotional” robots which are not controlled by neural networks but by symbolic systems such as those of Breazeal and Brooks, 2005.)
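Examining a neuro-robot's internal representations amounts to recording the activation pattern of its hidden units for each input and comparing those patterns. Below is a minimal Python sketch, assuming a tiny hand-weighted feedforward network; the weights, layer sizes, and stimulus names are hypothetical. Deciding whether the representations are embodied would then mean checking whether hidden patterns group stimuli by the action they trigger rather than by input similarity. The code only provides those measurements, not that analysis.

```python
import math

# Hypothetical weights for a 3-input, 2-hidden-unit, 1-output network.
W_HIDDEN = [[1.5, -1.0, 0.5],
            [0.5, 0.5, -2.0]]
W_OUT = [2.0, -1.5]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs: list[float]) -> tuple[list[float], float]:
    """Return the hidden activation pattern (the internal representation)
    together with the output unit's activation (the robot's response)."""
    hidden = [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in W_HIDDEN]
    output = sigmoid(sum(w * h for w, h in zip(W_OUT, hidden)))
    return hidden, output


def compare(stimuli: dict[str, list[float]]) -> list[tuple[str, str, float, float]]:
    """For every pair of stimuli, report the input distance and the
    distance between their hidden activation patterns."""
    names = list(stimuli)
    patterns = {name: forward(vec)[0] for name, vec in stimuli.items()}
    rows = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            rows.append((a, b,
                         math.dist(stimuli[a], stimuli[b]),
                         math.dist(patterns[a], patterns[b])))
    return rows


# Hypothetical sensory inputs.
STIMULI = {"red_ball": [1.0, 0.0, 0.0],
           "red_box": [1.0, 0.0, 1.0],
           "blue_ball": [0.0, 1.0, 0.0]}

for a, b, d_in, d_hidden in compare(STIMULI):
    print(f"{a} vs {b}: input distance {d_in:.2f}, hidden distance {d_hidden:.2f}")
```

In a trained network, one would compare these hidden distances with how differently the robot acts on each stimulus; hand-set weights like these are only a stand-in for that training.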

What we will do in this paper is describe a number of simple robots that may help us to construct an entire theory of mind as made up of a cognitive half and an emotional half.
